We had some fun at my ‘Raumkontrolle’ block seminar, trying out our Netavis Observer ‘face recognition’ software on various anti-facial-recognition strategies. It seems they were all designed against the same (OpenCV?) algorithm, and while I’m sure these strategies work against that, our (not very reliable) software looked right through the make-up and noise-glasses.

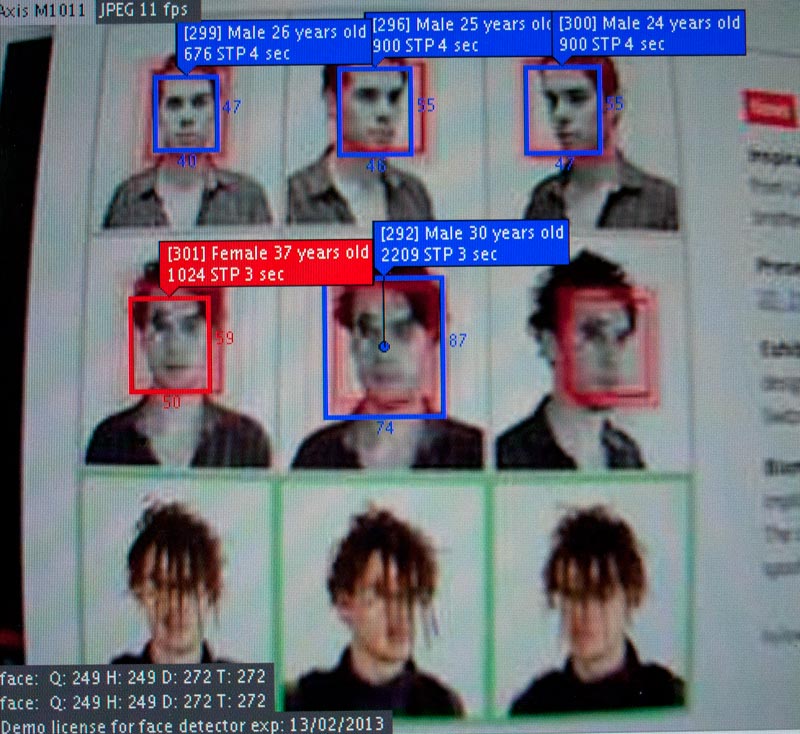

First test: the CV Dazzle project. The artist says the make-up and hairdo effectively stop software from recognizing faces. Let’s see… we pointed our IP-cam at a computer screen, the easiest way to get images from one system into another.

- CV Dazzle make-up working as expected. The bottom row of faces is not recognized.

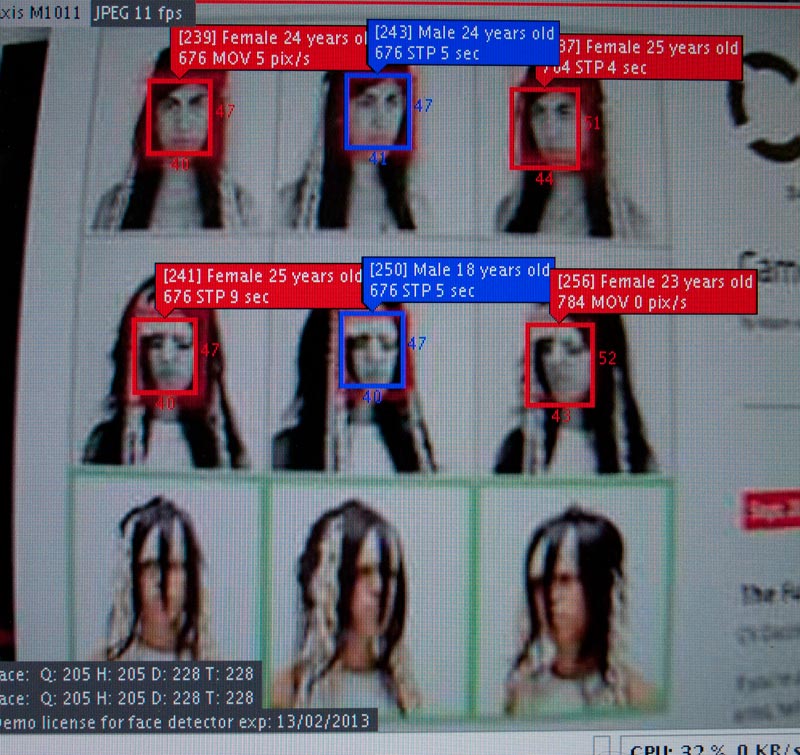

Same here.

- Oops. The algorithm got gender and age wrong, but hey. Not too bad!

- It also seems to be learning as we go.

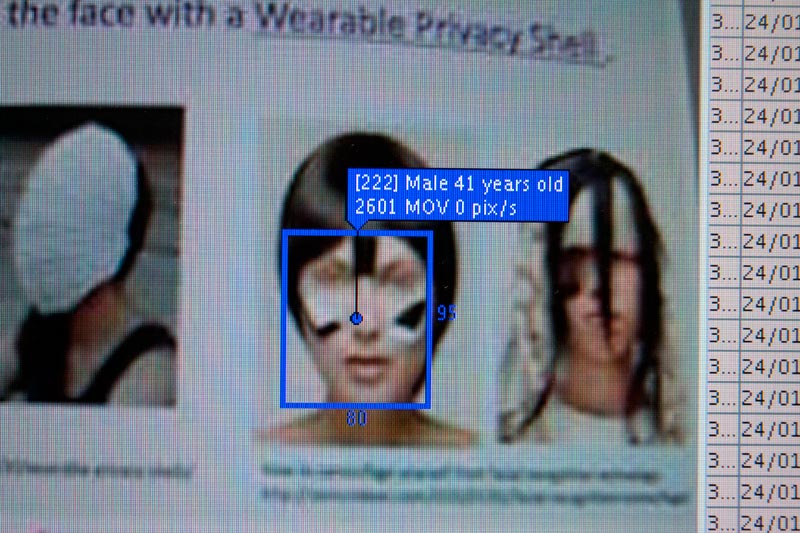

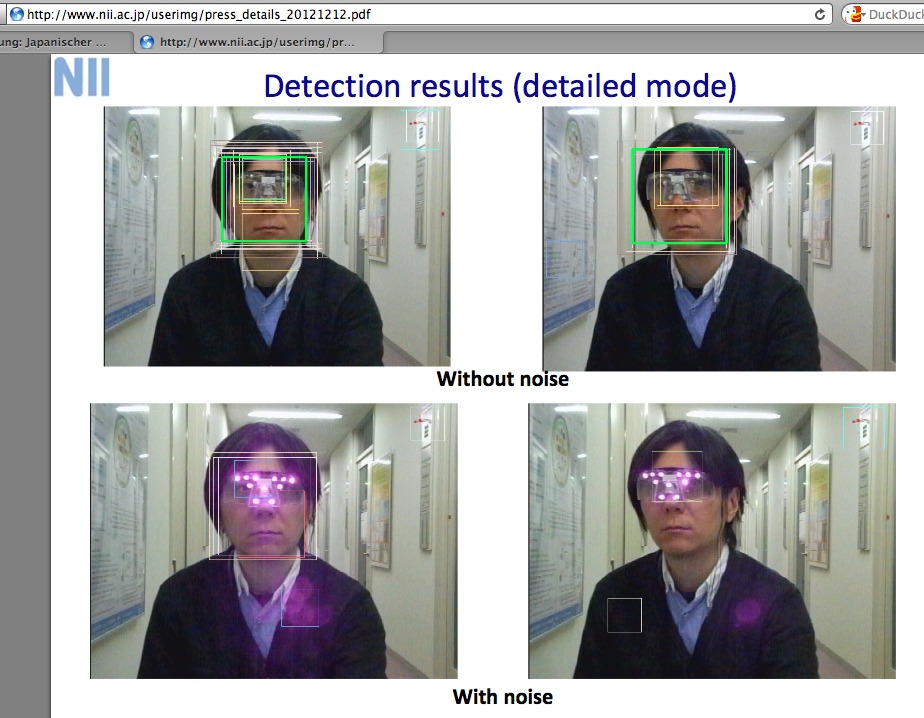

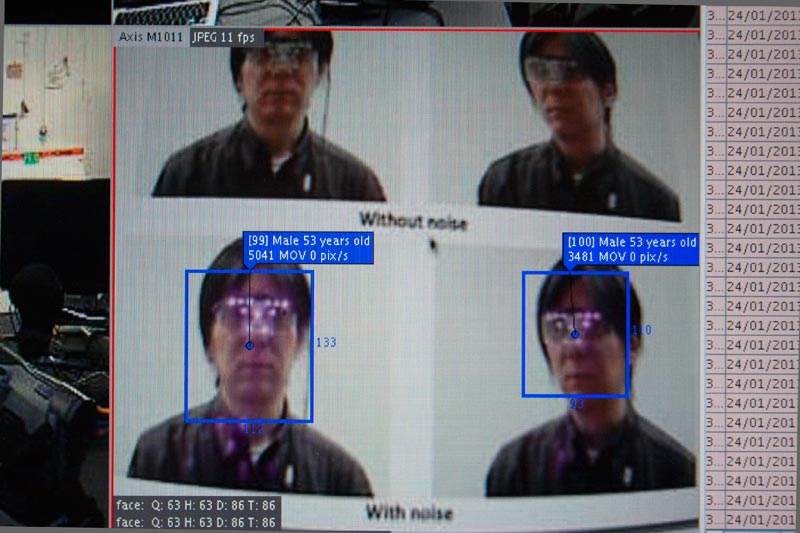

Next test: Isao Echizen’s modified sunglasses:

The project is called “Privacy Protection Techniques Using Differences in Human and Device Sensitivity”. If I understand it correctly, infrared light (invisible to the human eye, but not to a camera) in the eye-to-nose-bridge region of the face is meant to confuse face recognition algorithms.
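Here is a rough sketch of why that might work, assuming the targeted detector is a Viola-Jones-style Haar cascade (the classic OpenCV face detector; that part is my guess). Such detectors rely on simple brightness contrasts, for example that the eyes are darker than the bridge of the nose. The function names and pixel values below are made up for illustration, and the "glare" patch simply assumes the camera sees the eye areas as washed out.

```python
def integral_image(img):
    """Summed-area table with one extra row and column of zeros."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the pixels in the rectangle with top-left (x, y) and size w x h."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def eye_bridge_feature(img):
    """Three-rectangle Haar-like feature: 2 * middle third - (left + right thirds).

    Positive when the middle (nose bridge) is brighter than the sides (eyes).
    """
    ii = integral_image(img)
    h, w = len(img), len(img[0])
    third = w // 3
    left = rect_sum(ii, 0, 0, third, h)
    mid = rect_sum(ii, third, 0, third, h)
    right = rect_sum(ii, 2 * third, 0, third, h)
    return 2 * mid - (left + right)


# Toy grayscale patches (hypothetical values): eye | bridge | eye
plain = [[50, 50, 200, 200, 50, 50]] * 2       # dark eyes, bright bridge
glare = [[255, 255, 200, 200, 255, 255]] * 2   # eye areas washed out (assumed)

print(eye_bridge_feature(plain))  # 1200: the contrast the detector expects
print(eye_bridge_feature(glare))  # -440: the contrast is inverted
```

A real cascade chains thousands of such features with learned thresholds, so this only shows the principle. It also hints at why a different kind of recognition software may not care about the trick at all.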

Again, we pointed our IP-cam at a computer screen.

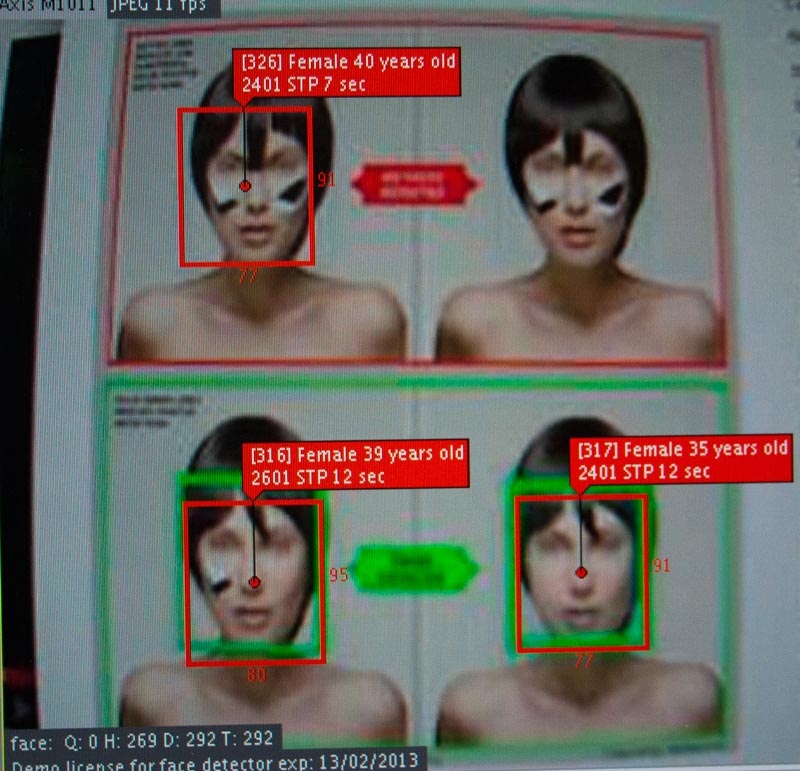

- Bingo! Even if we take into account that a video of pink-ish light on a computer screen isn’t the same as actual IR light, it doesn’t seem to work too well…