First tests confirm safety and security of video seminars. To be continued.

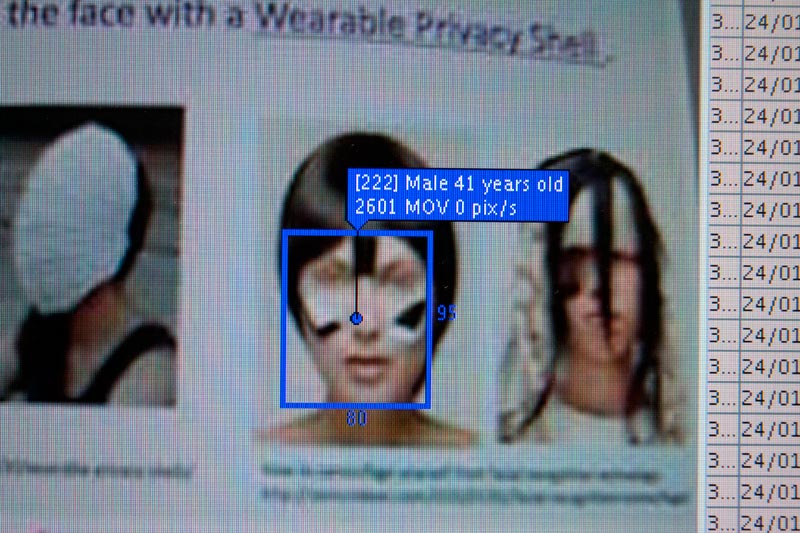

January 2015 – peak trust in IoT/home surveillance?

Just in case you’re wondering if we’re really f***ed. Bruce Schneier on the wide range of uses for facial recognition technology that’s becoming more and more perfect.

Just a thought regarding the representative function of portraits. The change is especially visible in rulers’ portraits. Take e.g. the Doge of Venice by Bellini, 1501:

Presumably as with other portraits of this kind, copies were put up in official buildings all around the country. So that the Doge would always be present. This way, one person can be in many places at once. And exercise authority.

Nowadays this, too, has been democratized. With the automatic synchronization of biometric face databases, we’re all everywhere, anytime. Except that the power relations have changed, too. It is the pictures of those who are to be controlled and disciplined that are being exchanged.

Those pictures are normally not put up on a wall (except maybe when you’re on the most wanted list). So what exactly happens when it is not humans looking at those portraits, but algorithms? What is being done to the representation of you?

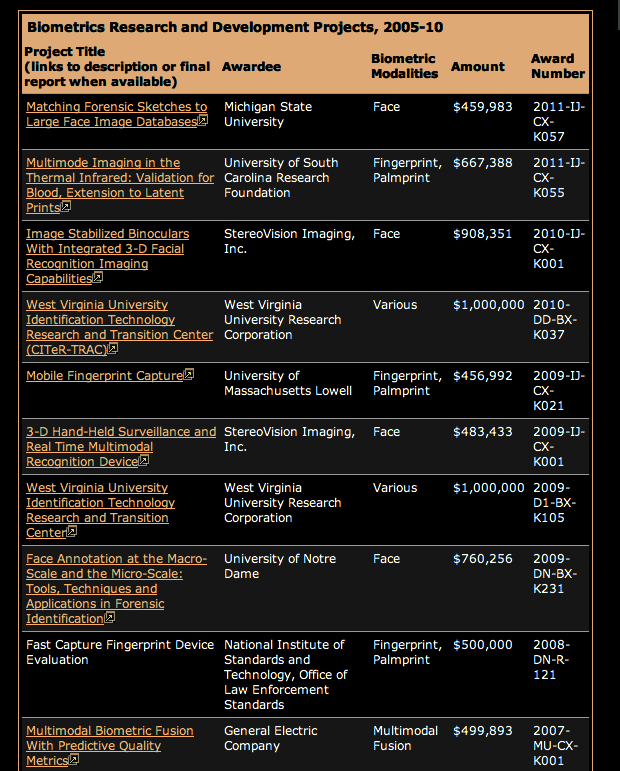

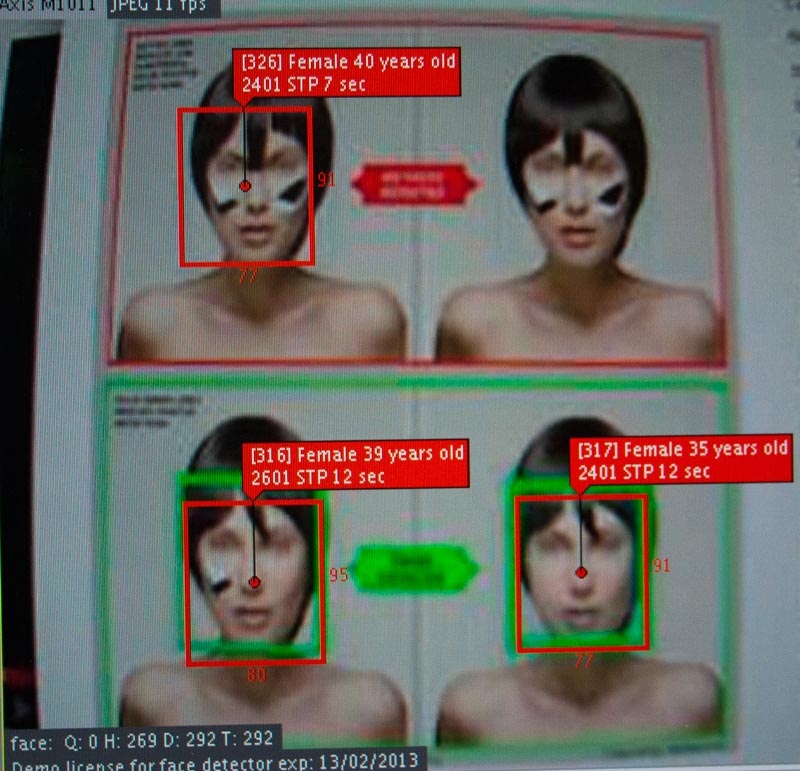

A still from the video below. The amazing bit about this is not that it’s there, and apparently working, but how incredibly crude it looks. The analysis meta-data drawing (if that’s what you can call the lines drawn onto the faces) looks like a child’s drawing of a face, unable to display any empathy, individuality or attitude. It works very well with the actors trying to do a blank Buster Keaton face. I wonder why, and what this tells me.

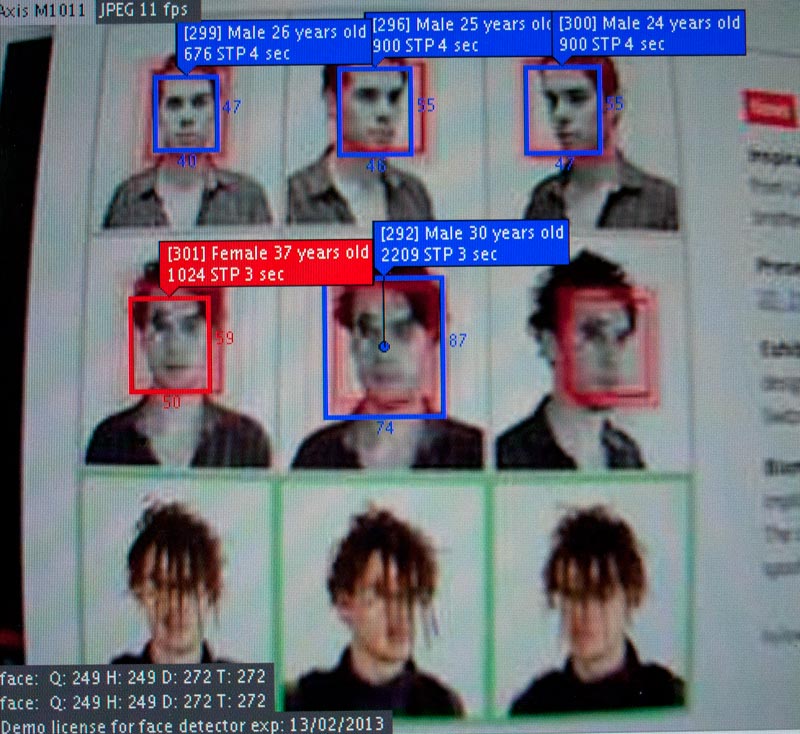

We had some fun at my ‘Raumkontrolle’ block seminar, trying out our Netavis Observer ‘face recognition’ software on various anti-Anti-Facial Recognition strategies. It seems these projects were all tested against the same (OpenCV?) algorithm, and while I’m sure the strategies work against that, our (not very reliable) software looked right through the make-up and noise-glasses.

First test: CV Dazzle project. The artist says the make-up and hairdo effectively stop software from recognizing faces. Let’s see… we pointed our IP-cam at a computer screen, the easiest way to get images from one system into another.

Same here.

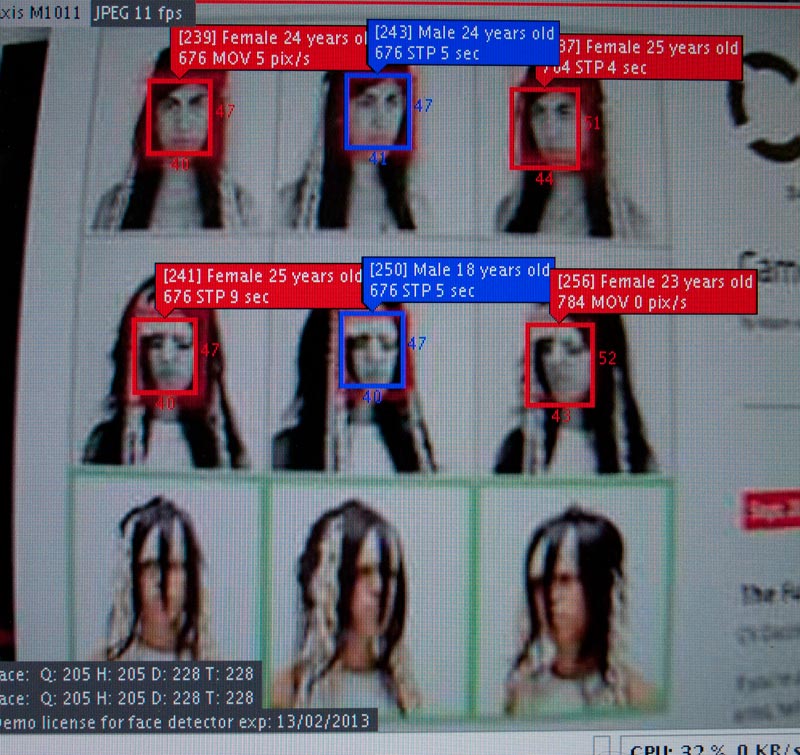

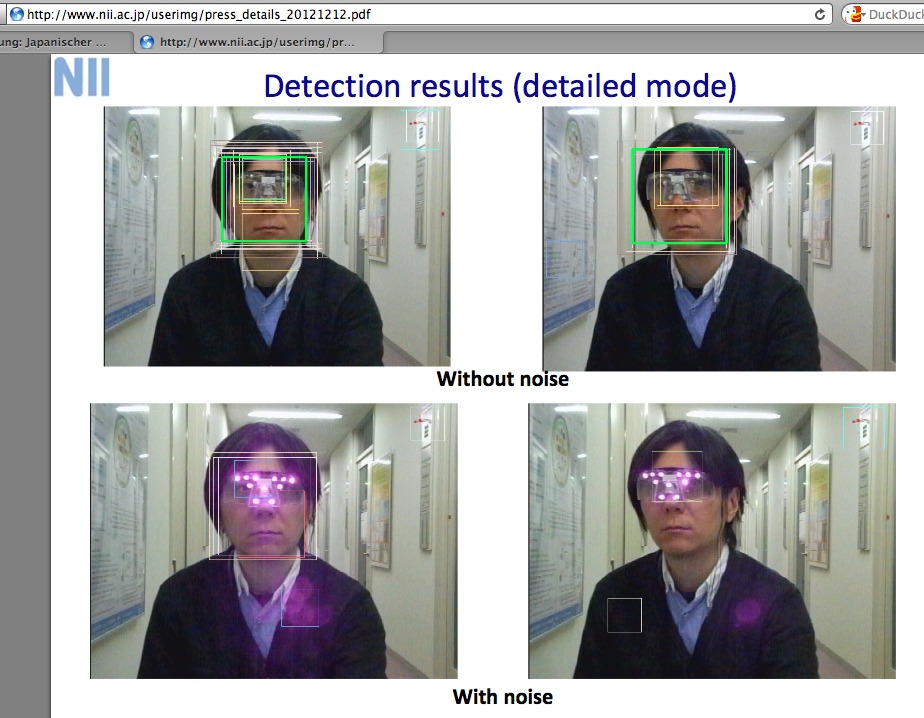

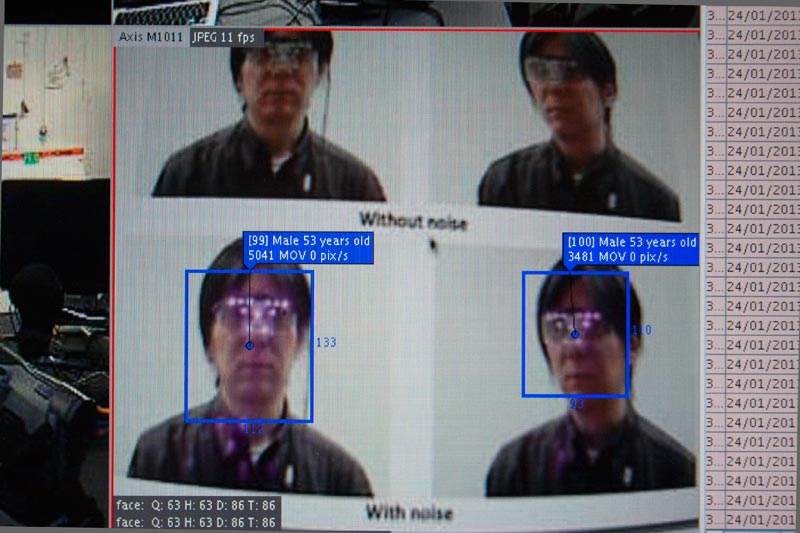

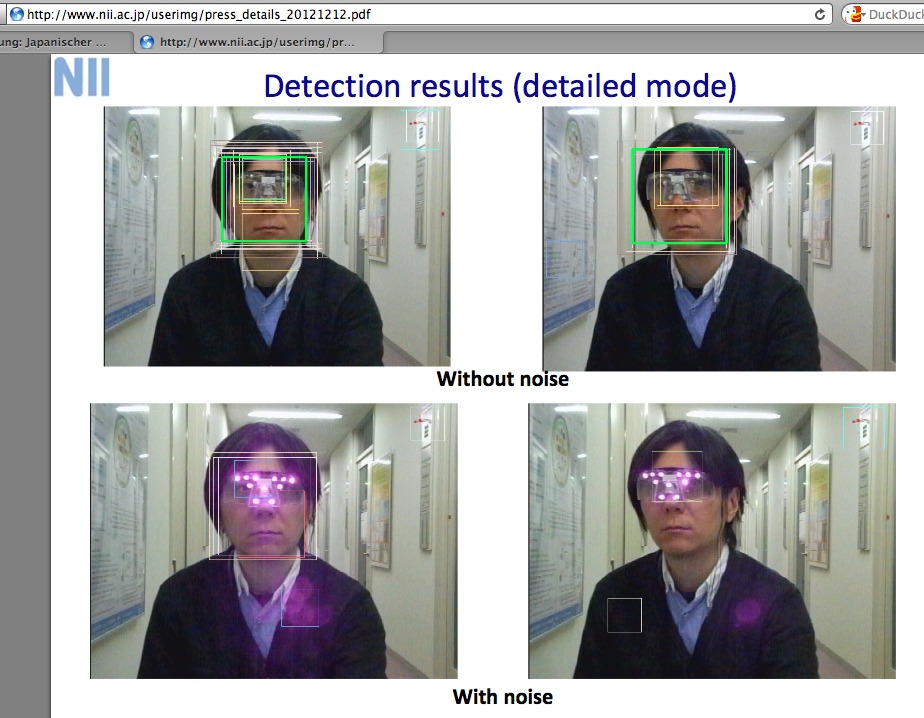

Next test: Isao Echizen’s modified sunglasses:

The project is called “Privacy Protection Techniques Using Differences in Human and Device Sensitivity”. If I understand it correctly, infrared light (invisible to the human eye) in the eye-to-nose-bridge region of the face is meant to confuse face recognition algorithms.

Again, we pointed our IP-cam at a computer screen.

We talked about Adam Harvey’s CV Dazzle project. Here is another idea, this time using LEDs to emit infrared light, invisible to the human eye, but captured by photo sensors. The infrared light works as noise, blocking that essential eye-to-nose bridge that facial recognition algorithms rely on.
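To make the “noise on the eye-to-nose bridge” idea a bit more concrete, here is a toy sketch. It assumes the detector is of the Viola-Jones / Haar-cascade kind (the classic OpenCV approach), which is only a guess. The pixel values, the patch size, the threshold and all function names are invented for illustration; this is not the algorithm of any particular product. It computes one Haar-like feature, the brightness of the nose bridge minus the brightness of the two eye regions, on a plain face patch and on one washed out by bright light.

```python
def integral_image(img):
    """Summed-area table with an extra zero row and column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def bridge_response(img):
    """Three-rectangle feature: mean of the middle third (nose bridge)
    minus the mean of the two outer thirds (eye regions)."""
    h, w = len(img), len(img[0])
    third = w // 3
    ii = integral_image(img)
    left_eye = rect_sum(ii, 0, 0, h, third)
    bridge = rect_sum(ii, 0, third, h, third)
    right_eye = rect_sum(ii, 0, 2 * third, h, third)
    area = h * third
    return bridge / area - (left_eye + right_eye) / (2 * area)


def make_patch(eye_value, bridge_value, height=4, width=12):
    """Hypothetical grayscale patch: eye | bridge | eye, constant per region."""
    third = width // 3
    row = [eye_value] * third + [bridge_value] * third + [eye_value] * third
    return [row[:] for _ in range(height)]


# Invented values: dark eye sockets next to a bright nose bridge.
plain_face = make_patch(eye_value=40, bridge_value=200)
# Assumption: the LEDs saturate the sensor across the whole band.
with_leds = make_patch(eye_value=255, bridge_value=255)

THRESHOLD = 50  # hypothetical cut-off for "this looks like an eye band"

for name, patch in [("plain face", plain_face), ("with LEDs", with_leds)]:
    response = bridge_response(patch)
    print(name, response, "face-like" if response > THRESHOLD else "rejected")
```

In this toy setting the contrast the feature looks for simply disappears once the region is saturated. Whether a real system behaves this way depends on the camera sensor actually recording infrared and on the detector relying on this kind of contrast, neither of which is confirmed here.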

Article in German: http://www.spiegel.de/netzwelt/gadgets/gesichtserkennung-japanischer-forscher-entwickelt-tarn-brille-a-879251.html

Update: Doesn’t seem to work!

Binoculars with embedded facial recognition. http://www.privacysos.org/node/928